VQ-VAE - Generative Model for Brain Images

The models are trained on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset, which consists of brain MRI scans of selected patients, each labelled as either Cognitive Normal (NC) or Alzheimer’s Disease (AD).

The VQ-VAE model consists of an encoder, a decoder and an added vector quantisation layer. The encoder maps the input data to a continuous encoding vector, with each dimension a learned attribute of the data. The vector quantiser then discretises this encoding by replacing it with the nearest vector in a learned codebook of embeddings, producing a discrete latent representation. The decoder then reconstructs the data from the discrete latent representation. The encoder and decoder are trained to minimise the distance between the input and the reconstructed output (the reconstruction loss), and the vector quantiser is trained to minimise the distance between its own input and output, that is, between the continuous encoding and its quantised version (the embedding loss). The loss function for training the VQ-VAE is the sum of the embedding loss and the reconstruction loss.
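
As a rough illustration of the quantisation step and the two loss terms, the following pure-Python sketch works on toy vectors. It assumes the standard nearest-neighbour codebook lookup and uses mean squared error for both terms. The function names, the toy codebook and the example values are hypothetical and are not taken from this project's code. A real model would use a deep learning framework and handle gradients through the quantiser (for example with a straight-through estimator), which is omitted here.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantise(
    encodings: Sequence[Vector], codebook: Sequence[Vector]
) -> Tuple[List[int], List[List[float]]]:
    """Replace each continuous encoding with its nearest codebook vector.

    Returns the chosen codebook indices (the discrete latent representation)
    and the matching quantised vectors that a decoder would receive.
    """
    indices = [
        min(range(len(codebook)), key=lambda k: squared_distance(z, codebook[k]))
        for z in encodings
    ]
    quantised = [list(codebook[k]) for k in indices]
    return indices, quantised


def mean_squared_error(a: Sequence[Vector], b: Sequence[Vector]) -> float:
    """Mean squared error over all elements of two equally shaped vector lists."""
    total = sum(squared_distance(u, v) for u, v in zip(a, b))
    count = sum(len(u) for u in a)
    return total / count


def vqvae_loss(
    inputs: Sequence[Vector],
    reconstructions: Sequence[Vector],
    encodings: Sequence[Vector],
    quantised: Sequence[Vector],
) -> float:
    """Sum of the reconstruction loss and the embedding loss (assumed MSE)."""
    reconstruction_loss = mean_squared_error(inputs, reconstructions)
    embedding_loss = mean_squared_error(encodings, quantised)
    return reconstruction_loss + embedding_loss


# Toy example: two 2-D encodings and a codebook with two entries.
codebook = [[0.0, 0.0], [1.0, 1.0]]
encodings = [[0.1, -0.2], [0.9, 1.2]]
indices, quantised = quantise(encodings, codebook)  # indices == [0, 1]

# Hypothetical input and reconstruction, standing in for real images.
inputs = [[1.0, 0.0]]
reconstructions = [[0.5, 0.0]]
loss = vqvae_loss(inputs, reconstructions, encodings, quantised)
```

In this toy case each encoding snaps to the codebook entry closest to it, and the total loss is the reconstruction error plus the gap between the encodings and their quantised versions.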

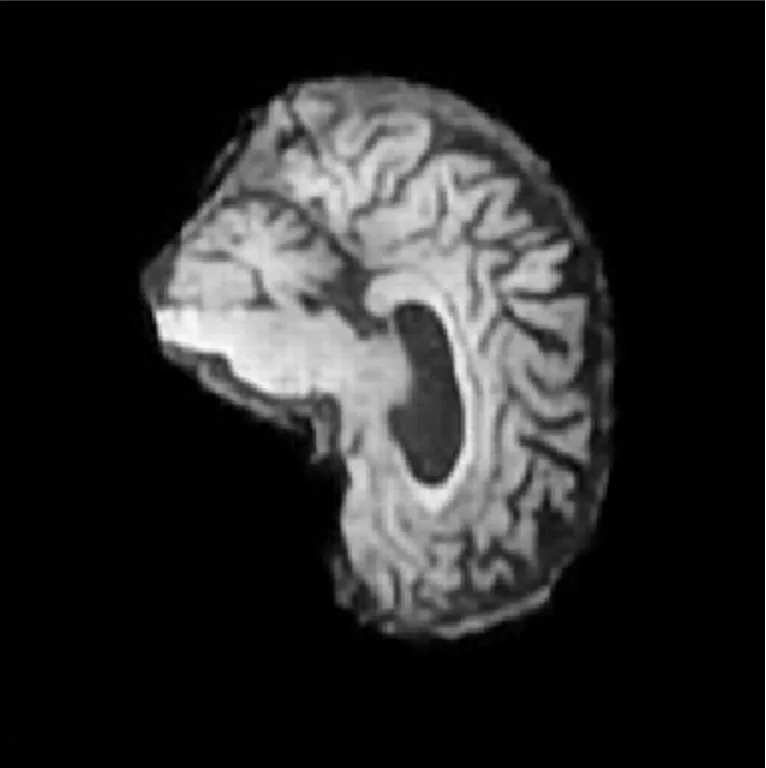

On the unseen test split, the network achieved an average similarity of 0.62, indicating that the generated images resembled the real images. The model was also able to generate images of both classes, with the generated AD images being more similar to the real images than the generated NC images. This is likely because the AD class has more images in the dataset, giving the model more data to learn from.